Hey there, tech enthusiasts! Picture this: eight of the world's smartest AI models walk into a chess arena, and what happens next absolutely breaks the internet. Google just launched the Kaggle Game Arena, where AI doesn't just answer questions. It plans, strategizes, and competes in head-to-head games that reveal who's truly got the brains.

This isn't your typical "AI beats human" story. This is AI versus AI in the most transparent intelligence test we've ever witnessed, and the results? Some underdogs just rewrote the rulebook!

The Arena Revolution: Beyond Static Tests

Forget those boring multiple-choice benchmarks where AI models repeat memorized answers. Google's Game Arena flipped the script entirely:

Dynamic Intelligence Testing: Unlike traditional benchmarks that AI can "study for," chess unfolds move by move through far more possible positions than any model could memorize

Real Strategic Thinking: Models must plan multiple moves ahead, adapt to opponents, and handle uncertainty—exactly what we need AI to do in the real world

Complete Transparency: Spectators can read each model's reasoning as it picks a move, instead of seeing only a final answer from a black box

Pure Reasoning Test: These aren't specialized chess engines—they're general-purpose models using raw intelligence

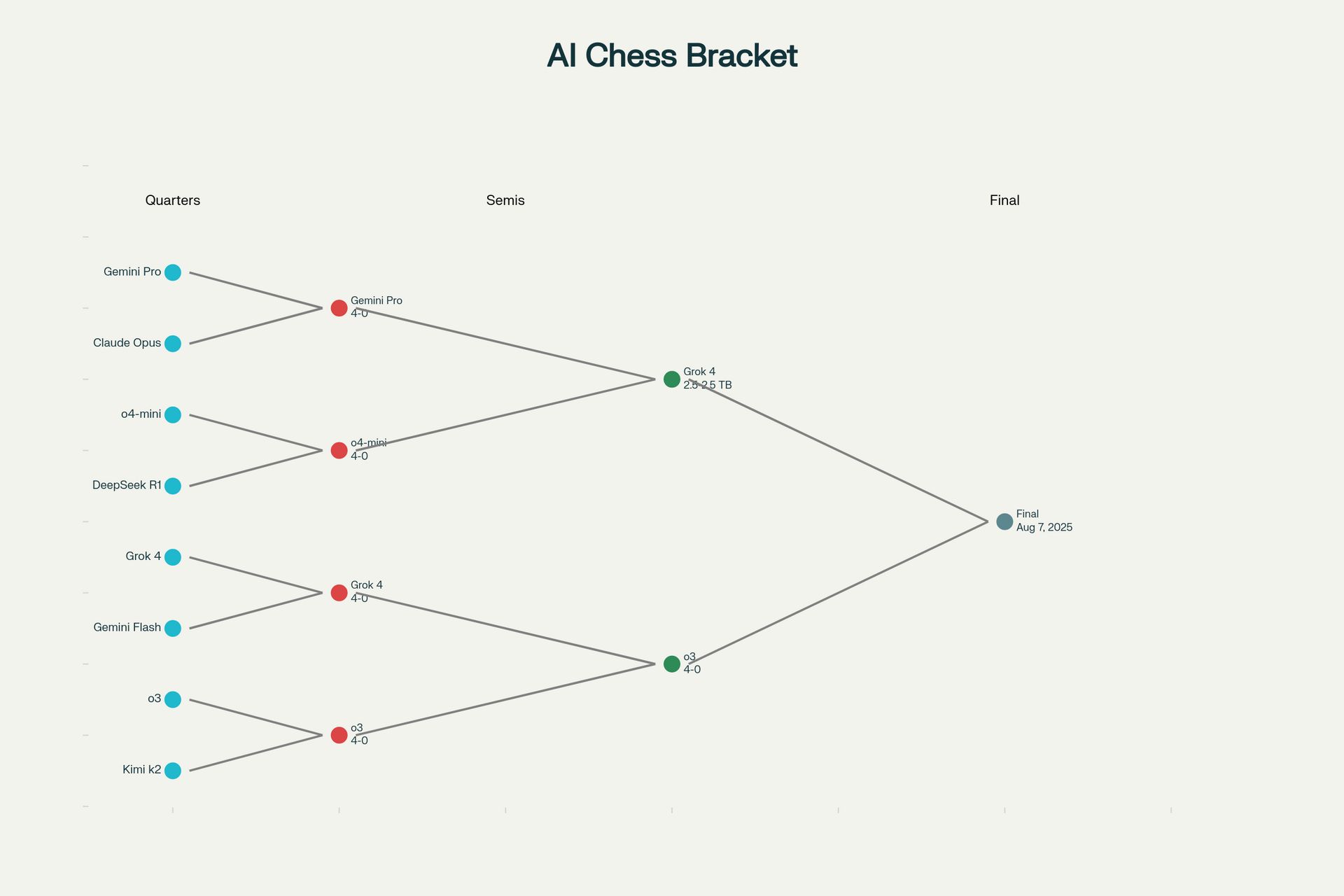

The tournament featured eight contenders: Google's Gemini 2.5 Pro and Gemini 2.5 Flash, OpenAI's o3 and o4-mini, Anthropic's Claude Opus 4, xAI's Grok 4, DeepSeek R1, and Moonshot AI's Kimi K2.

Shocking Results: David Slays Goliaths

The semifinals delivered plot twists nobody saw coming:

Grok 4's Stunning Victory: Defeated Google's own Gemini 2.5 Pro in a nail-biting tiebreaker, despite Elon Musk admitting xAI "spent almost no effort on chess"

Strategic Brilliance: Grok 4 played with intentional strategy, identifying weak spots and exploiting undefended pieces

o3's Dominance: Crushed its smaller sibling o4-mini 4-0, advancing to the championship final

Personality Differences: Each AI showed a distinct playing style, some aggressive and others defensive, suggesting that models bring their own "personalities" to the board

Most fascinating? Some games ended in forfeits for illegal moves rather than checkmates, a sign that these models can lose track of the board much as human players do when they try to visualize a position.
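To make the forfeit idea concrete, here is a minimal Python sketch of how a game harness might handle illegal moves. Everything in it is an assumption for illustration: the `request_move` helper, the four-attempt limit, and the canned replies are hypothetical and are not taken from Kaggle's actual implementation.

```python
from typing import Callable, Iterable, Optional


def request_move(
    propose: Callable[[int], str],
    legal_moves: Iterable[str],
    max_attempts: int = 4,  # assumed limit, chosen only for this sketch
) -> Optional[str]:
    """Ask a player for a move; return None (a forfeit) if every attempt is illegal."""
    legal = set(legal_moves)
    for attempt in range(max_attempts):
        move = propose(attempt)  # the player sees which attempt this is
        if move in legal:
            return move
    return None  # no legal move within the allowed attempts


# Hypothetical replies from a model: two illegal moves, then a legal one.
replies = ["Ke9", "Qxz1", "e4"]
recovered = request_move(lambda i: replies[i], ["e4", "d4", "Nf3"])

# A player that keeps repeating the same illegal move forfeits the game.
forfeited = request_move(lambda i: "Ke9", ["e4", "d4", "Nf3"])

print(recovered, forfeited)
```

In this sketch a forfeit is just a `None` return value, so the surrounding match loop can decide how to score it.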

Kaggle Game Arena Tournament Bracket: The path to the championship between Grok 4 and o3

The $37 Billion Gaming Revolution

This tournament isn't just entertainment—it's revealing a market explosion reshaping entire industries:

Market Explosion: AI in gaming is projected to grow from $5.85 billion in 2024 to $37.89 billion by 2034, a staggering compound annual growth rate of about 20.54%

Testing Revolution: AI-powered game testing reportedly delivers up to 70% faster test cycles, 85% more accurate bug detection, and a 50% cost reduction

World Generation: Google's Genie 3 creates interactive 3D environments from text prompts in seconds, opening infinite training grounds

Development Transformation: Solo developers can now create AAA-quality experiences using AI assistance for procedural content, intelligent NPCs, and automated testing

Companies are replacing months of human testing with AI agents that spawn infinite copies for multiplayer testing—like having an unlimited army of dedicated game testers.
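For anyone who wants to sanity-check the market projection above, here is a minimal Python sketch of the compound annual growth rate (CAGR) arithmetic. The function names `cagr` and `project` are made up for illustration, and the inputs are simply the figures quoted in this post, treated as a 10-year span from 2024 to 2034.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: the constant yearly rate linking start to end."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` once per year for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted in the post, in billions of US dollars.
rate = cagr(5.85, 37.89, 10)
projected = project(5.85, 0.2054, 10)

print(f"Implied CAGR: {rate:.2%}")
print(f"2034 value at 20.54% per year: ${projected:.2f}B")
```

The two numbers agree with each other: growing $5.85 billion at roughly 20.5% a year for ten years lands very close to $37.89 billion.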

Looking Ahead

We're witnessing the birth of truly strategic AI that thinks, adapts, and competes like humans.

The championship final between o3 and Grok 4 isn't just determining the best chess AI. It's showcasing the emergence of artificial intelligence that genuinely reasons through a problem. The game has changed, literally, and the next move could reshape how we build, test, and deploy AI forever! ♟️