Here's what Sam Altman didn't tell you in his carefully crafted summer announcement: OpenAI has already burned through over $1 billion on GPT-5 development, with at least two catastrophic training runs that completely failed. Each six-month training cycle costs approximately $500 million in compute alone—that's more than the GDP of some small countries, all spent teaching a single AI system to think.

While other newsletters are recycling the same "GPT-5 is coming" headlines, here's the real story unfolding in Silicon Valley's server farms and boardrooms.

The Technical Leap That Changes Everything

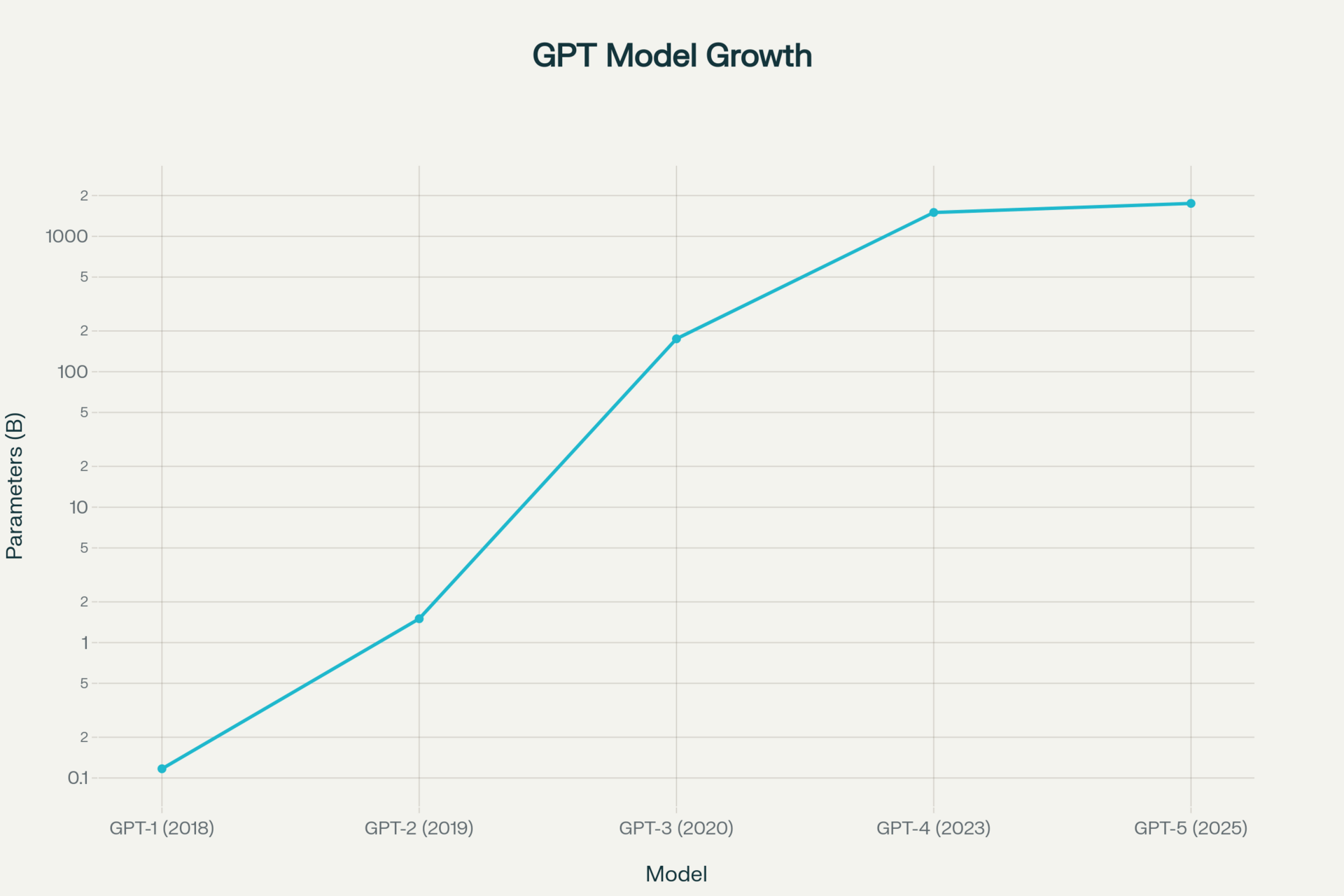

GPT-5 isn't just a scale-up: it's fundamentally different. At an estimated 1.5 trillion parameters, it would actually be slightly smaller than GPT-4's estimated 1.76 trillion, so the real breakthrough lies in its unified architecture rather than in raw size.

Think of current AI models like a Swiss Army knife where you manually select each tool. GPT-5 is the first model that automatically knows when to think fast or slow, eliminating the confusing "model picker" that even OpenAI admits it hates. Its Chain-of-Thought (CoT) reasoning is baked directly into the core architecture, not bolted on as an afterthought.

The exponential growth of GPT model parameters from GPT-1 to the projected GPT-5, showing the massive scale-up in AI model complexity

What this means in practice: Ask GPT-5 to debug your code, and it'll automatically engage deep reasoning mode. Ask for a quick summary, and it responds instantly. No more choosing between GPT-4 for creativity and o1 for logic—one model does it all.
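
To make the "think fast or slow" idea concrete, here is a toy sketch of what automatic routing could look like from the outside. It is purely illustrative: the cue list, the `Route` type, and the `route_query` function are hypothetical names invented for this example, and nothing here reflects how OpenAI actually implements routing, which would presumably be learned rather than hand-written.

```python
import re
from dataclasses import dataclass

# Hypothetical cues that hint a query needs multi-step reasoning.
DEEP_CUES = ("debug", "prove", "step by step", "optimize")


@dataclass
class Route:
    mode: str    # "fast" or "deep"
    reason: str  # short explanation of why this mode was chosen


def route_query(query: str, long_query_words: int = 80) -> Route:
    """Pick a response mode with simple heuristics (an assumption, not a real API)."""
    text = query.lower()
    for cue in DEEP_CUES:
        # Word boundaries prevent "prove" from matching inside "improve".
        if re.search(r"\b" + re.escape(cue) + r"\b", text):
            return Route("deep", f"matched cue {cue!r}")
    # Very long prompts are treated as complex in this sketch.
    if len(text.split()) > long_query_words:
        return Route("deep", "long query")
    return Route("fast", "no reasoning cue found")


if __name__ == "__main__":
    for q in ("Please debug this function", "Give me a quick summary of this memo"):
        print(q, "->", route_query(q).mode)
```

The point of the sketch is the interface, not the heuristic: the user sends one query to one entry point, and the choice between a quick answer and deeper reasoning happens behind it.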

Here's what the enterprise world is quietly preparing for: GPT-5 will likely be offered free to maintain OpenAI's market position, despite the astronomical development costs. This mirrors the classic tech playbook—subsidize adoption to capture market share, then monetize through enterprise features and API usage.

The competitive clock is ticking faster than anyone admits. Industry forecasts suggest that within 3-6 months of GPT-5's launch, competing models from Anthropic, Google, and DeepSeek will match its performance. That is a projection, but it fits the pace of recent AI development cycles.

NVIDIA GPU-powered servers inside a state-of-the-art data center powering AI training workloads (AI generated)

What Your Competitors Are Missing

Many people are overlooking the smaller changes that could transform entire industries.

Enterprise Disruption: Companies spending millions on specialized AI tools may find GPT-5 renders 70% of their SaaS stack obsolete. The average enterprise uses 254 different software applications—GPT-5's unified capabilities could consolidate dozens of these into a single interface.

Training Data Crisis: OpenAI has essentially run out of high-quality internet data to train on. GPT-5's training required creating entirely new synthetic datasets and proprietary content—a $100+ million data acquisition effort that's rarely discussed publicly.

Infrastructure Reality: The model requires 250,000-500,000 NVIDIA H100 GPUs for training. To put this in perspective, that's roughly 10-20% of NVIDIA's entire annual H100 production capacity dedicated to a single model.
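
A quick sanity check on those figures, as a minimal sketch: if 250,000-500,000 GPUs is 10-20% of annual output, both ends of the range imply the same yearly production number. The helper name `implied_annual_capacity` is made up for this example, and the inputs are the article's estimates, not confirmed NVIDIA data.

```python
def implied_annual_capacity(gpus_used: int, share_percent: int) -> float:
    """Annual production implied if `gpus_used` is `share_percent`% of it."""
    return gpus_used * 100 / share_percent


# Low end: 250,000 GPUs at 10% of capacity.
low = implied_annual_capacity(250_000, 10)
# High end: 500,000 GPUs at 20% of capacity.
high = implied_annual_capacity(500_000, 20)

print(f"Implied annual H100 capacity: {low:,.0f} to {high:,.0f}")
```

Both ends work out to about 2.5 million H100s per year, so the percentage range is internally consistent with the GPU range, whatever the true production figure turns out to be.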

The Unification Revolution

This is the end of AI model confusion. GPT-5 represents the first successful merger of multiple AI architectures into one system:

Multimodal by default: Text, images, audio, and video processing without switching tools

Adaptive intelligence: Automatically selects the optimal response strategy for each query

Extended memory: Maintains context across much longer conversations and documents

Built-in reasoning: No more trade-offs between speed and logical thinking

What Happens Next

Summer 2025 will be remembered as the inflection point where AI moved from "helpful" to "indispensable." Based on insider development timelines, expect GPT-5 between August and September 2025, with early enterprise access beginning weeks earlier.

The three-phase rollout strategy:

Phase 1: Limited enterprise beta (July 2025)

Phase 2: ChatGPT Plus integration (August 2025)

Phase 3: Free tier access with usage limits (September 2025)

Bottom line: While others debate capabilities, smart organizations are already restructuring their AI strategies around unified intelligence. The companies that recognize this shift earliest will gain an 18-month advantage before the market catches up.

The age of AI model switching is ending. The age of AI thinking is beginning.